In natural language processing (NLP) and information retrieval, the ability to efficiently and accurately retrieve relevant information is paramount. As the field evolves, new techniques are being developed to improve the performance of retrieval systems, particularly in the context of Retrieval Augmented Generation (RAG). One such technique, two-stage retrieval with rerankers, has emerged as a powerful way to address the inherent limitations of traditional retrieval methods.

In this blog post, we’ll delve into two-stage retrieval and rerankers, exploring their underlying principles, implementation strategies, and the benefits they offer for the accuracy and efficiency of RAG systems. We’ll also provide practical examples and code snippets to illustrate the concepts.

Understanding Retrieval Augmented Generation (RAG)

Before diving into the specifics of two-stage retrieval and rerankers, let’s briefly revisit the concept of Retrieval Augmented Generation (RAG). RAG is a technique that extends the knowledge and capabilities of large language models (LLMs) by giving them access to external information sources, such as databases or document collections. For more background, see the article “A Deep Dive into Retrieval Augmented Generation in LLM”.

Related articles: “RAFT: A Fine-Tuning and RAG Approach to Domain-Specific Question Answering”, “A Full Guide to Fine-Tuning Large Language Models”, “The Rise of Mixture of Experts for Efficient Large Language Models”, and “A Guide to Mastering Large Language Models”.

The typical RAG process involves the following steps:

- Query: A user poses a question or provides an instruction to the system.

- Retrieval: The system queries a vector database or document collection to find information relevant to the user’s query.

- Augmentation: The retrieved information is combined with the user’s original query or instruction.

- Generation: The language model processes the augmented input and generates a response, leveraging the external information to improve the accuracy and comprehensiveness of its output.
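The four steps above can be sketched as a tiny, dependency-free pipeline. This is only an illustration under stated assumptions: the documents, the word-overlap retriever, and the stub generator are all made up for the example and stand in for a real vector search and a real language model.

```python
from typing import Callable, List

# Toy document collection (illustrative data, not from a real corpus)
DOCS = [
    "Rerankers reorder retrieved documents by relevance to the query.",
    "LanceDB is a vector database.",
    "T5 is a sequence-to-sequence language model.",
]

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Retrieval: rank documents by shared words (a crude stand-in for vector search)."""
    q_words = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q_words & set(d.lower().split())), reverse=True)
    return ranked[:k]

def augment(query: str, context: List[str]) -> str:
    """Augmentation: combine the retrieved text with the original query."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {query}"

def rag_answer(query: str, docs: List[str], generate: Callable[[str], str]) -> str:
    """Query -> Retrieval -> Augmentation -> Generation."""
    prompt = augment(query, retrieve(query, docs))
    return generate(prompt)

def echo_generate(prompt: str) -> str:
    """Hypothetical stub standing in for a language model call."""
    return f"[model output for a prompt of {len(prompt)} characters]"

print(rag_answer("what is a vector database", DOCS, echo_generate))
```

Passing the generator in as a function keeps the retrieval and augmentation steps testable without loading a model.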

While RAG has proven to be a powerful technique, it is not without its challenges. One of the key issues lies in the retrieval stage, where traditional retrieval methods may fail to identify the most relevant documents, leading to suboptimal or inaccurate responses from the language model.

The Need for Two-Stage Retrieval and Rerankers

Traditional retrieval methods, such as those based on keyword matching or vector space models, often struggle to capture the nuanced semantic relationships between queries and documents. This limitation can result in retrieving documents that are only superficially relevant, or in missing crucial information that could significantly improve the quality of the generated response.

To address this challenge, researchers and practitioners have turned to two-stage retrieval with rerankers. This approach involves a two-step process:

- Initial Retrieval: In the first stage, a relatively large set of potentially relevant documents is retrieved using a fast and efficient retrieval method, such as a vector space model or a keyword-based search.

- Reranking: In the second stage, a more sophisticated reranking model reorders the initially retrieved documents based on their relevance to the query, bringing the most relevant documents to the top of the list.

The reranking model, often a neural network or a transformer-based architecture, is specifically trained to assess the relevance of a document to a given query. By leveraging advanced natural language understanding capabilities, the reranker can capture the semantic nuances and contextual relationships between the query and the documents, resulting in a more accurate and relevant ranking.
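The two steps above can be sketched as a small funnel. In this minimal example, the word-overlap first stage and the `phrase_score` function are hypothetical stand-ins (a real system would use vector search and a trained neural reranker); the point is only the shape of the pipeline: a cheap pass over everything, then a finer pass over the survivors.

```python
from typing import Callable, List

def first_stage(query: str, docs: List[str], top_k: int) -> List[str]:
    """Fast, coarse retrieval: count shared lowercase words."""
    q = set(query.lower().split())
    ranked = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return ranked[:top_k]

def second_stage(query: str, candidates: List[str],
                 score: Callable[[str, str], float], top_n: int) -> List[str]:
    """Slower, finer reranking applied only to the first-stage candidates."""
    ranked = sorted(candidates, key=lambda d: score(query, d), reverse=True)
    return ranked[:top_n]

def phrase_score(query: str, doc: str) -> float:
    """Hypothetical stand-in for a reranker: rewards adjacent query word pairs kept in order."""
    q = query.lower().split()
    d = doc.lower().split()
    doc_bigrams = set(zip(d, d[1:]))
    return float(sum(1 for pair in zip(q, q[1:]) if pair in doc_bigrams))

docs = [
    "pizza in york is new to me",
    "new york pizza guide",
    "weather report for today",
    "york minster tours",
]
query = "new york pizza"

candidates = first_stage(query, docs, top_k=3)
final = second_stage(query, candidates, phrase_score, top_n=2)
print(candidates)
print(final)
```

The first stage cannot tell the first two documents apart because both share three words with the query; the second stage promotes the one that preserves the phrase.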

Benefits of Two-Stage Retrieval and Rerankers

The adoption of two-stage retrieval with rerankers offers several significant benefits in the context of RAG systems:

- Improved Accuracy: By reranking the initially retrieved documents and promoting the most relevant ones to the top, the system can provide more accurate and precise information to the language model, leading to higher-quality generated responses.

- Mitigated Out-of-Domain Issues: Embedding models used for traditional retrieval are often trained on general-purpose text corpora, which may not adequately capture domain-specific language and semantics. Reranking models, on the other hand, can be trained on domain-specific data, mitigating the “out-of-domain” problem and improving the relevance of retrieved documents within specialized domains.

- Scalability: The two-stage approach scales efficiently by using fast, lightweight retrieval methods in the initial stage while reserving the more computationally intensive reranking step for a smaller subset of documents.

- Flexibility: Reranking models can be swapped or updated independently of the initial retrieval method, so the system can adapt as its needs evolve.
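To make the scalability point concrete, here is a back-of-the-envelope calculation. All numbers are hypothetical (collection size, candidate count, and per-pair reranker latency are assumptions chosen for illustration), and the cost of the first stage itself is ignored.

```python
def rerank_cost_ms(num_docs_scored: int, ms_per_pair: float) -> float:
    """Reranker latency if every (query, document) pair costs ms_per_pair milliseconds."""
    return num_docs_scored * ms_per_pair

corpus_size = 1_000_000  # hypothetical collection size
top_k = 50               # hypothetical number of first-stage candidates
ms_per_pair = 5.0        # hypothetical reranker cost per (query, document) pair

full = rerank_cost_ms(corpus_size, ms_per_pair)  # reranking the whole collection
funnel = rerank_cost_ms(top_k, ms_per_pair)      # reranking only the candidates

print(f"rerank all: {full / 1000:.0f} s, rerank top-{top_k}: {funnel:.0f} ms")
# prints "rerank all: 5000 s, rerank top-50: 250 ms"
```

Under these assumptions the reranker's cost depends on the candidate count rather than the collection size, which is why the expensive model is reserved for the second stage.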

ColBERT: Efficient and Effective Late Interaction

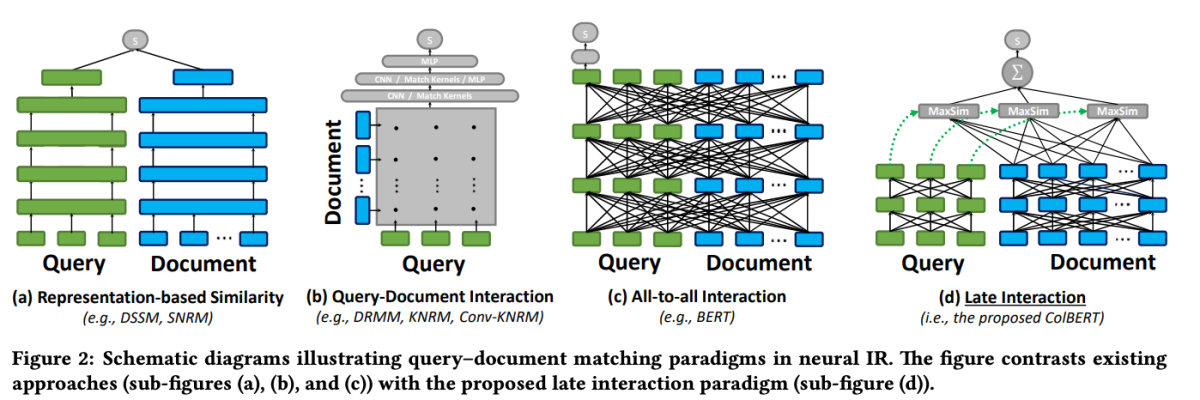

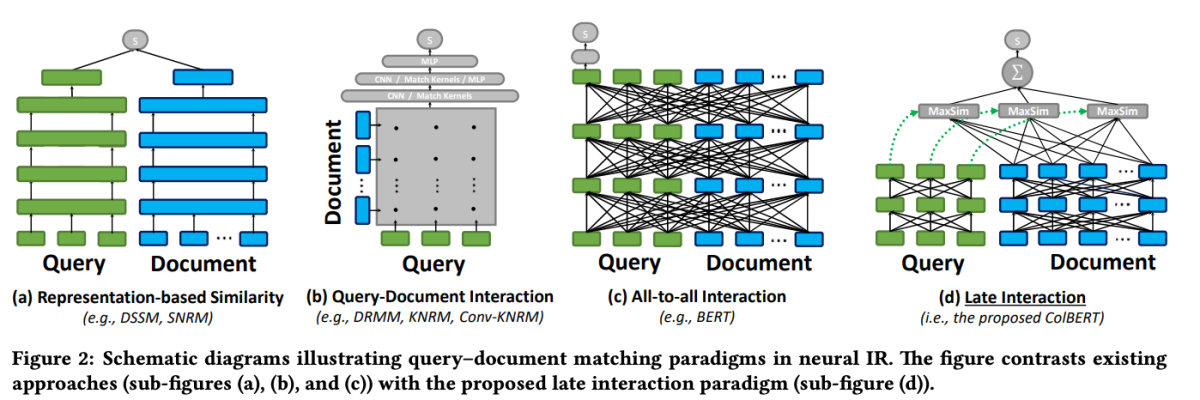

One of the standout models in the realm of reranking is ColBERT (Contextualized Late Interaction over BERT). ColBERT is a document reranker model that leverages the deep language understanding capabilities of BERT while introducing a novel interaction mechanism known as “late interaction.”

ColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT

The late interaction mechanism in ColBERT allows for efficient and precise retrieval by processing queries and documents separately until the final stages of the retrieval process. Specifically, ColBERT independently encodes the query and the document using BERT, and then applies a lightweight yet powerful interaction step that models their fine-grained similarity. By delaying but retaining this fine-grained interaction, ColBERT can leverage the expressiveness of deep language models while also gaining the ability to pre-compute document representations offline, considerably speeding up query processing.
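The interaction step can be illustrated with the MaxSim operation ColBERT is known for: each query token embedding is matched against its most similar document token embedding, and those maxima are summed. The sketch below is a minimal pure-Python version under stated assumptions: the 2-dimensional embeddings are made up, and they are unit-normalized so that a dot product equals cosine similarity. Real ColBERT embeddings come from BERT and have far more dimensions.

```python
from typing import List

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def maxsim_score(query_vecs: List[Vector], doc_vecs: List[Vector]) -> float:
    """Late interaction: for each query token, take its best-matching document token, then sum."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)

# Toy unit-length token embeddings (illustrative values only)
query_tokens = [[1.0, 0.0], [0.0, 1.0]]
doc_a_tokens = [[1.0, 0.0], [0.6, 0.8]]  # covers both query tokens reasonably well
doc_b_tokens = [[1.0, 0.0], [1.0, 0.0]]  # covers only the first query token

score_a = maxsim_score(query_tokens, doc_a_tokens)
score_b = maxsim_score(query_tokens, doc_b_tokens)
print(score_a, score_b)
```

Because the document token vectors do not depend on the query, they can be computed and stored ahead of time; only the cheap max-and-sum runs at query time.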

ColBERT’s late interaction architecture offers several benefits, including improved computational efficiency, scalability with document collection size, and practical applicability to real-world scenarios. Additionally, ColBERT has been further enhanced with techniques like denoised supervision and residual compression (in ColBERTv2), which refine the training process and reduce the model’s space footprint while maintaining high retrieval effectiveness.

The jina-colbert-v1-en model, for example, can be configured to index a collection of documents, leveraging its ability to handle long contexts efficiently.

Implementing Two-Stage Retrieval with Rerankers

Now that we have an understanding of the principles behind two-stage retrieval and rerankers, let’s explore their practical implementation within a RAG system. We’ll use popular libraries and frameworks to demonstrate how these techniques fit together.

Setting Up the Environment

Before we dive into the code, let’s set up our development environment. We’ll be using Python and several popular NLP libraries, including Hugging Face Transformers, Sentence Transformers, and LanceDB.

<pre>
# Install required libraries
!pip install datasets huggingface_hub sentence_transformers lancedb
</pre>

Data Preparation

For demonstration purposes, we’ll use the “ai-arxiv-chunked” dataset from Hugging Face Datasets, which contains over 400 ArXiv papers on machine learning, natural language processing, and large language models.

<pre>
from datasets import load_dataset

# Load the training split of the chunked ArXiv dataset
dataset = load_dataset("jamescalam/ai-arxiv-chunked", split="train")
</pre>

Next, we’ll preprocess the data and split it into smaller chunks to facilitate efficient retrieval and processing.

<pre>
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

def chunk_text(text, chunk_size=512, overlap=64):
    """Split text into windows of up to chunk_size tokens; neighbors share `overlap` tokens."""
    # Skip special tokens so [CLS]/[SEP] do not leak into the decoded chunks
    token_ids = tokenizer.encode(text, add_special_tokens=False)
    stride = chunk_size - overlap
    # Stop early enough that the last window is not just the previous window's overlap
    starts = range(0, max(len(token_ids) - overlap, 1), stride)
    return [tokenizer.decode(token_ids[i:i + chunk_size]) for i in starts]

chunked_data = []
for doc in dataset:
    chunked_data.extend(chunk_text(doc["chunk"]))
</pre>

For the initial retrieval stage, we’ll use a Sentence Transformer model to encode our documents and queries into dense vector representations, and then perform approximate nearest neighbor search using a vector database like LanceDB.

<pre>
import lancedb
from sentence_transformers import SentenceTransformer

# Load Sentence Transformer model
model = SentenceTransformer("all-MiniLM-L6-v2")

# Connect to a LanceDB database stored in a local directory
db = lancedb.connect("/path/to/store")

# Encode all chunks in batches, then index them in a "docs" table
vectors = model.encode(chunked_data, batch_size=64, show_progress_bar=True)
table = db.create_table(
    "docs",
    data=[{"vector": v.tolist(), "text": t} for v, t in zip(vectors, chunked_data)],
)
</pre>

With our documents indexed, we can perform the initial retrieval by finding the nearest neighbors to a given query vector.

<pre>
# Example query (an illustrative placeholder; any question about the indexed papers works)
query = "What is retrieval augmented generation?"

# Open the indexed table and encode the query with the same embedding model
table = db.open_table("docs")
query_vector = model.encode(query).tolist()

# Fetch the 20 nearest chunks as first-stage candidates (returned as a pyarrow Table)
initial_docs = table.search(query_vector).limit(20).to_arrow()
</pre>
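To show what a nearest-neighbor lookup computes, here is a dependency-free, brute-force sketch. It is an illustration under stated assumptions rather than how a vector database works internally: the 2-dimensional vectors are made up, cosine similarity is just one common choice of metric, and real systems use approximate indexes instead of scanning every vector.

```python
import math
from typing import List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def nearest_neighbors(query_vec: List[float],
                      index: List[Tuple[str, List[float]]],
                      k: int) -> List[Tuple[str, float]]:
    """Exact top-k search: score every stored vector, then keep the k most similar."""
    scored = [(text, cosine(query_vec, vec)) for text, vec in index]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy index of (text, embedding) pairs with made-up embeddings
toy_index = [
    ("doc about cats", [1.0, 0.0]),
    ("doc about dogs", [0.0, 1.0]),
    ("doc about pets", [1.0, 1.0]),
]

top = nearest_neighbors([1.0, 0.1], toy_index, k=2)
print([text for text, _ in top])
```

The first stage only needs to be good enough to keep the relevant chunks somewhere in the top k; ordering within that set is the reranker's job.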

Reranking

After the initial retrieval, we’ll use a reranking model to reorder the retrieved documents based on their relevance to the query. In this example, we’ll use the ColBERT reranker, a fast and accurate transformer-based model specifically designed for document ranking.

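<pre>
from lancedb.rerankers import ColbertReranker

# Depending on the LanceDB version, this reranker may need extra packages installed
reranker = ColbertReranker()

# Rerank the first-stage candidates (a pyarrow Table with a "text" column)
reranked = reranker.rerank_vector(query, initial_docs)

# Chunk texts as a plain list, most relevant first
reranked_docs = reranked["text"].to_pylist()
</pre>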

The reranked_docs list now contains the documents reordered based on their relevance to the query, as determined by the ColBERT reranker.

Augmentation and Generation

With the reranked documents in hand, we can proceed to the augmentation and generation stages of the RAG pipeline. We’ll use a language model from the Hugging Face Transformers library to generate the final response.

<pre>
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

# Separate names so the embedding model and BERT tokenizer loaded earlier are not overwritten
gen_tokenizer = AutoTokenizer.from_pretrained("t5-base")
gen_model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

# Augment query with the top three reranked documents
augmented_query = query + " " + " ".join(reranked_docs[:3])

# Generate response from language model (truncate the input to 512 tokens)
input_ids = gen_tokenizer.encode(
    augmented_query, return_tensors="pt", truncation=True, max_length=512
)
output_ids = gen_model.generate(input_ids, max_length=500)
response = gen_tokenizer.decode(output_ids[0], skip_special_tokens=True)
print(response)
</pre>